Persistent Volumes

The Operator fully supports Couchbase Clusters running with persistent storage. This section details benefits and requirements in order to correctly function.

Benefits of Using Persistent Storage

All production deployments should be run with persistent volumes. At a very basic level this allows us to collect logs and diagnose any Couchbase Server issues. Due to the ephemeral nature of Kubernetes, without persistent volumes those logs would be gone forever in the event of a pod terminally crashing.

Using persistent storage also allows the Couchbase cluster to be tailored to the workload. Persistent volumes allow the selection of storage media type e.g. flash can be explicitly selected for high performance.

Persistent storage also makes the Couchbase cluster far more resilient. In a total disaster an ephemeral cluster cannot be recovered, the data is lost forever. With persistent storage the Operator can recover the cluster.

Recovery is faster with persistent storage. As data is persisted there is a high probability that a large percentage of data is still valid and can be reused. The Operator makes use of delta-node recovery to dramatically reduce rebalance times.

Storage Topologies

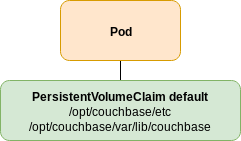

The most basic storage topology per node is show below:

This uses the couchbaseclusters.spec.servers.volumeMounts.default volume mount.

The volume has two sub volumes, one for configuration data that must be retained for Couchbase recovery, and one for data and logs.

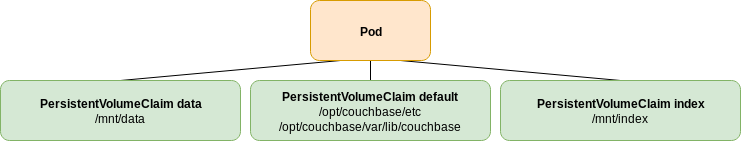

The Operator additionally allows more advanced configurations as shown below:

Your workload may require high performance disk I/O for data and index services, however configuration and logs may reside on cheaper storage media.

For this reason you can specify couchbaseclusters.spec.servers.volumeMounts.data, couchbaseclusters.spec.servers.volumeMounts.index and couchbaseclusters.spec.servers.volumeMounts.analytics volumes.

Supported Storage Classes

The Operator is designed to work with dynamically provisioned storage classes. While a Couchbase cluster can be configured to use other storage types they are not rigorously tested.

Couchbase Server pods may have different storage volumes associated with each service.

For example couchbaseclusters.spec.servers.volumeMounts.default, which is used for configuration and logging may be on cost effective storage whereas couchbaseclusters.spec.servers.volumeMounts.data may reside on high performance SSD backed storage.

In most cloud providers persistent volumes are scheduled across all possible availability zones. If these two volumes were provisioned in different availability zones then a pod that they were attached to could not be scheduled. Pods must reside in the same availability zone as their storage.

It is for this reason that the Operator requires lazily bound storage classes to function on Kubernetes clusters spread across multiple availability zones. When using lazy binding persistent volumes are not scheduled until after the pod is scheduled. Persistent volumes attached to a pod would then inherit its availability zone and provision correctly.

Please refer to the storage class how-to guide to configure lazy bound storage classes.

|

Some storage providers or platforms may work transparently across availability zones. If this is the case then you may use any existing storage class. Please consult with your Kubernetes vendor to confirm. |

Storage Reclaim Policy

The storage reclaim policy related to a storage class in use by the Operator is largely irrelevant.

The Operator uses PersistentVolumeClaims to keep storage volumes alive.

Our recommendation is to use the Delete policy.

Operator volume names are based on the cluster name, and therefore deleting and recreating a cluster with the same name may alias resource names and cause provisioning failure when a retaining policy is in use.

Using Storage Classes

Couchbase Server pods never run as root. Persistent storage mounts, by default, are mounted as root. This means that Couchbase Server pods are unable to write to the persistent volume.

In order to allow Couchbase Server to write to the persistent storage you must specify a file system group to mount the persistent volume as. This mounts the persistent volume so the Couchbase Server user is able to write to the persistent volume.

The couchbaseclusters.spec.securityContext.fsGroup parameter allows this to be explicitly set.

On Kubernetes the file system group can be any non-zero value (we use 1000 internally for testing).

On Red Hat OpenShift you do not need to populate this field.

Local Persistent Volumes

The Couchbase Operator and CouchbaseCluster resources can be configured to utilize local persistent volumes (LPV).

Using LPV comes with risks and should be fully understood before attempting to run this configuration in a production cluster.

The first consideration is that upgrade is more resource intensive than using standard persistent volumes. The reason is that the Operator is unable to rely on an LPV Provisioner to clean up unused volumes. Since volumes are typically solely bound to a pod, this means that you will need N additional volumes (where N is the number of pods) as we create the replacement pods.

The second consideration is that most management of LPV is a manual task performed at the K8S Node level. It is up to the K8S Administrator to maintain these LPV for usage with the Couchbase Cluster.

Lastly, High Availability is impacted if a K8S node goes offline. If the node (and pod) disappears, likely the PV will as well. This will cause issues, as the operator will attempt to replace the pod and reuse the PVC. Obviously this will not work and will require human intervention to correct. The administrator will need to restore the K8S node and PV, or pause the operator and remove the afflicted pod from the cluster (and delete associated persistent volumes and persistent volume claims) and then unpause the operator.

To utilize Local Persistent Volumes, you must first install a provisioner for your platform and create a storage class for the Local Volumes.

Once you have your created Persistent Volumes and a Storage class that references these Persistent Volumes, you can create a volume mount template to reference this storage class.

apiVersion: couchbase.com/v2

kind: CouchbaseCluster

metadata:

name: my-cluster

spec:

volumeClaimTemplates:

- metadata:

name: local

annotations:

"storage.couchbase.com/local": true

spec:

storageClassName: "local-disk"

resources:

requests:

storage: 50Gi

- metadata:

name: logging

spec:

storageClassName: "standard"

resources:

requests:

storage: 5GiBy adding the storage.couchbase.com/local annotation, this informs Operator that references to this template on a single pod should all be mounted inside a single PV. This simplifies setting up your LPV by allowing you to allocate an entire disk for a PV.

This would allow you to specify the volumes for a server group as such:

spec:

image: couchbase/server:7.1.4

security:

adminSecret: cb-example-auth

buckets:

managed: true

servers:

- size: 3

name: all_services

services:

- data

- index

- query

- search

- eventing

- analytics

volumeMounts:

logging: logging

data: local

index: local

analytics:

- local

- local

volumeClaimTemplates:

- metadata:

name: local

annotations:

"storage.couchbase.com/local": true

spec:

storageClassName: "local-disk"

resources:

requests:

storage: 50Gi

- metadata:

name: logging

spec:

storageClassName: "standard"

resources:

requests:

storage: 5GiThis would place all "local" volumes onto a single persistent volume claim (PVC) mounted under sub-directories, while the logging would be placed in its own PVC.