Couchbase Networking

Connecting to a Couchbase cluster in Kubernetes is challenging. This section outlines supported strategies and key concepts.

Couchbase Server is a high-performance database that distributes data across all pods in a cluster. Clients know which pod each data item resides on and perform load balancing themselves, sending each request directly to the owning pod. Doing the load balancing in the client avoids unnecessary network hops and improves performance.

For this reason, Couchbase Server cannot be accessed through standard Kubernetes Service or Ingress resources, which would forward each request to an arbitrary pod, unless it is accessed via Cloud Native Gateway (CNG).
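To illustrate client-side load balancing, here is a minimal sketch. It assumes the CRC32-based key-to-partition (vBucket) hashing commonly used by Couchbase clients and a partition count of 1024; the pod names and the round-robin `vbucket_map` are hypothetical, since a real client receives its map from the cluster.

```python
import zlib

NUM_VBUCKETS = 1024  # assumed partition count; real clients read it from the cluster map


def vbucket_for_key(key: str, num_vbuckets: int = NUM_VBUCKETS) -> int:
    """Hash a document key to a vBucket (partition) ID using CRC32."""
    crc = zlib.crc32(key.encode("utf-8"))
    return ((crc >> 16) & 0x7FFF) % num_vbuckets


def node_for_key(key: str, vbucket_map: list[str]) -> str:
    """Return the pod that owns the key's vBucket in a simplified map."""
    return vbucket_map[vbucket_for_key(key, len(vbucket_map))]


# Hypothetical three-pod cluster; vBuckets assigned round-robin for illustration.
pods = ["cb-example-0000", "cb-example-0001", "cb-example-0002"]
vbucket_map = [pods[vb % len(pods)] for vb in range(NUM_VBUCKETS)]

owner = node_for_key("user::1234", vbucket_map)
print(f"user::1234 -> vBucket {vbucket_for_key('user::1234')} on {owner}")
```

Because every client computes the same mapping, each one can address the owning pod directly. A Kubernetes Service in front of the pods would break this, because it picks a backend pod without regard to the key.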

The following options describe the possible networking topologies for connecting clients to a Couchbase cluster. Unless otherwise stated, "clients" includes all Couchbase Client SDKs, Couchbase Mobile, and Couchbase XDCR connections.

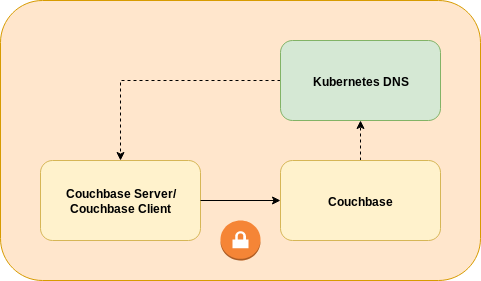

Intra-Kubernetes Networking

Intra-Kubernetes networking is the simplest option: it allows clients running in the same Kubernetes cluster as the Couchbase Server instance to connect to it. Any client can use this method of communication.

The client can use endpoint DNS entries to connect to individual Couchbase nodes. Stable service discovery is provided by SRV records. TLS can be used to secure communications.
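A minimal sketch of the names involved, assuming a hypothetical cluster `cb-example` in namespace `default`, an Operator-managed SRV service named `<cluster>-srv`, a headless service named after the cluster, and the default `cluster.local` cluster domain. The `_couchbase._tcp` and `_couchbases._tcp` prefixes follow the Couchbase SDK connection-string conventions for plain and TLS connections; SDKs typically attempt an SRV lookup when the connection string holds a single host name with no port. Verify the names against your own deployment.

```python
def srv_host(cluster: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Host name of the SRV service (assumed to be named "<cluster>-srv")."""
    return f"{cluster}-srv.{namespace}.svc.{cluster_domain}"


def srv_record_name(cluster: str, namespace: str, tls: bool = False,
                    cluster_domain: str = "cluster.local") -> str:
    """DNS SRV record a client queries to discover the Couchbase pods."""
    service = "_couchbases" if tls else "_couchbase"
    return f"{service}._tcp.{srv_host(cluster, namespace, cluster_domain)}"


def connection_string(cluster: str, namespace: str, tls: bool = False,
                      cluster_domain: str = "cluster.local") -> str:
    """Single-host connection string that the SDK resolves via DNS SRV."""
    scheme = "couchbases" if tls else "couchbase"
    return f"{scheme}://{srv_host(cluster, namespace, cluster_domain)}"


def pod_hostname(pod: str, cluster: str, namespace: str,
                 cluster_domain: str = "cluster.local") -> str:
    """Per-pod endpoint DNS name (assumes a headless service named after the cluster)."""
    return f"{pod}.{cluster}.{namespace}.svc.{cluster_domain}"


print(connection_string("cb-example", "default", tls=True))
print(srv_record_name("cb-example", "default", tls=True))
print(pod_hostname("cb-example-0000", "cb-example", "default"))
```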

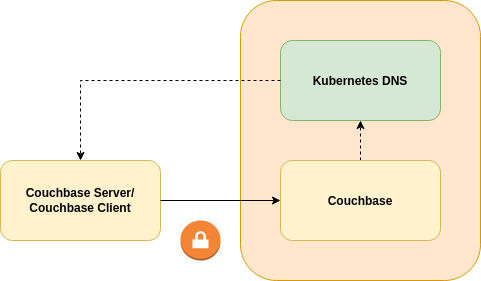

Inter-Kubernetes Networking with Forwarded DNS

Inter-Kubernetes networking allows clients to connect to Couchbase Server instances in a remote Kubernetes cluster. It uses the DNS service offered by the remote Kubernetes cluster to provide addressing.

A local DNS server is used by Couchbase Server and clients to forward DNS requests for a remote namespace to a remote Kubernetes cluster. Requests that do not fall into this DNS zone are forwarded to the local DNS service. Any DNS server that supports forwarding of zones to remote DNS servers can be used, but we recommend CoreDNS as the de facto cloud native standard.
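The forwarding rule can be modeled as a longest-suffix match over DNS zones. This sketch only models the behavior a forwarding DNS server such as CoreDNS implements; it is not CoreDNS configuration. The zone `remote-ns.svc.cluster.local` and both upstream IP addresses are hypothetical.

```python
# Hypothetical forwarding table: zone -> upstream DNS server.
# The "." entry is the required default (the local Kubernetes DNS service).
FORWARD_ZONES = {
    "remote-ns.svc.cluster.local.": "10.8.0.10",  # remote cluster's DNS service
    ".": "10.96.0.10",                            # local Kubernetes DNS
}


def _normalize(name: str) -> str:
    """Lower-case a DNS name and make it fully qualified (trailing dot)."""
    name = name.lower()
    return name if name.endswith(".") else name + "."


def upstream_for(qname: str, zones: dict[str, str] = FORWARD_ZONES) -> str:
    """Pick the upstream whose zone is the longest suffix of the query name."""
    qname = _normalize(qname)
    best_zone = "."
    for zone in zones:
        if zone == ".":
            continue
        # Match the zone itself or any name beneath it, on a label boundary.
        if qname == zone or qname.endswith("." + zone):
            if len(zone) > len(best_zone):
                best_zone = zone
    return zones[best_zone]


print(upstream_for("cb-example-0000.cb-example.remote-ns.svc.cluster.local"))
print(upstream_for("kubernetes.default.svc.cluster.local"))
```

Matching on label boundaries matters: a local namespace such as `other-remote-ns` must not be captured by the `remote-ns` zone.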

This method of communication can only be used if clients in one cluster can communicate directly with pods in another, which requires routed networking. For example:

- Google GKE allows multiple Kubernetes clusters in the same virtual private cloud and has routed networking by default.
- Amazon AWS can use the VPC CNI plugin to create routed networks that can be peered together.

The client can use endpoint DNS entries to connect to individual Couchbase nodes. Stable service discovery is provided by SRV records. TLS can be used to secure communications.

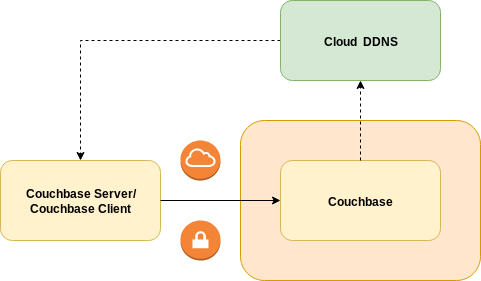

Public Networking with External DNS

Public networking allows clients to connect to Couchbase Server instances from anywhere with an Internet connection.

External DNS is a service that can be run in a Kubernetes namespace. It allows load-balancer services to advertise their public IP addresses through public DDNS services. This networking type requires Exposed Features, which has its own client requirements and limitations.

The client can use DNS names for load-balancer service public IP addresses to connect to individual Couchbase nodes. Stable service discovery is provided by a load-balanced HTTP connection to the cluster admin service. TLS must be used to secure communications.
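A sketch of the resulting addressing, assuming for illustration that each pod is published as `<pod-name>.<dns.domain>`. The cluster name, the domain `cb-example.example.com`, and the `console.` admin host name are hypothetical. Port 18091 is Couchbase Server's TLS port for the admin (HTTP) service, and `/pools/default/nodeServices` is a REST endpoint that returns the node map.

```python
def public_node_hostnames(pods: list[str], domain: str) -> list[str]:
    """Hypothetical public DNS name for each pod, published via External DNS."""
    return [f"{pod}.{domain}" for pod in pods]


def bootstrap_url(admin_host: str, port: int = 18091) -> str:
    """HTTPS URL of the node-map endpoint behind the load-balanced admin service."""
    return f"https://{admin_host}:{port}/pools/default/nodeServices"


domain = "cb-example.example.com"  # hypothetical spec.networking.dns.domain
print(public_node_hostnames(["cb-example-0000", "cb-example-0001"], domain))
print(bootstrap_url(f"console.{domain}"))
```

The HTTPS bootstrap reflects the requirement above that TLS must be used with this topology.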

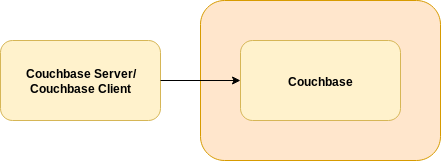

Generic Networking

Generic networking is discouraged for production deployments; use one of the preceding methods of communication instead. This networking type requires Exposed Features, which has its own client requirements and limitations.

The client uses Kubernetes node ports to connect to individual Couchbase nodes. Stable service discovery is not possible. TLS cannot be used to secure communications.
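The sketch below illustrates why node-port addressing offers no stable discovery: the address a client dials is the IP of one particular Kubernetes node plus a per-service port from the node-port range (30000-32767 by default, set by the kube-apiserver `--service-node-port-range` flag). Pod names, the node IP, and port numbers are hypothetical.

```python
NODE_PORT_RANGE = range(30000, 32768)  # Kubernetes default node-port range


def node_port_endpoints(pod_ports: dict[str, int], node_ip: str) -> dict[str, str]:
    """Map each Couchbase pod to the node-IP:port a client would dial."""
    endpoints = {}
    for pod, port in pod_ports.items():
        if port not in NODE_PORT_RANGE:
            raise ValueError(f"{pod}: {port} is outside the default node-port range")
        endpoints[pod] = f"{node_ip}:{port}"
    return endpoints


# Each pod's service gets its own node port (values are illustrative).
ports = {"cb-example-0000": 31091, "cb-example-0001": 31092}
print(node_port_endpoints(ports, "192.0.2.10"))
# If node 192.0.2.10 is replaced, every address the client holds becomes invalid.
```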

Note: When using Istio or another service mesh, remember that strict-mode mTLS cannot be used with Kubernetes node ports.

Exposed Features

Both Public Networking with External DNS and Generic Networking require client traffic to cross a DNAT boundary: clients connect to IP addresses that differ from those of the underlying Couchbase pods. As a result, when clients first contact Couchbase Server to retrieve the map of nodes and their hostnames, the Operator must replace the internal Kubernetes DNS names with addresses the clients can reach.

Setting couchbaseclusters.spec.networking.exposedFeatures when creating a Couchbase cluster instructs the Operator to override the node mappings so that clients are given IP addresses they can connect to.

It also causes the Operator to create separate services that are visible outside the Kubernetes cluster.

When using Public Networking with External DNS, you also specify couchbaseclusters.spec.networking.dns.domain. This populates the cluster node maps with DNS names and annotates the services so that External DNS can replicate these names to a cloud DDNS service provider.
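Clients must decide whether to use the internal addresses or the overridden "external" ones in the node map. The sketch below models a simplified version of the automatic selection heuristic: if the bootstrap host matches a node's internal hostname, use the default network; if it matches an `external` alternate address, use the external network. The node map structure is loosely modeled on Couchbase Server's alternate addresses and is simplified and hypothetical, as are the host names. A heuristic of this kind is what goes wrong for the older Sync Gateway versions described in the Sync Gateway limitations below.

```python
from typing import Any

NodeMap = list[dict[str, Any]]


def select_network(bootstrap_host: str, nodes: NodeMap) -> str:
    """Return "default" or "external" using a simplified auto-selection rule."""
    for node in nodes:
        if node["hostname"] == bootstrap_host:
            return "default"
    for node in nodes:
        external = node.get("alternateAddresses", {}).get("external", {})
        if external.get("hostname") == bootstrap_host:
            return "external"
    return "default"


def addresses(nodes: NodeMap, network: str) -> list[str]:
    """Host:port pairs for the data service on the chosen network."""
    result = []
    for node in nodes:
        if network == "external":
            ext = node["alternateAddresses"]["external"]
            # Fall back to the internal port if no external port is mapped.
            port = ext.get("ports", {}).get("kv", node["ports"]["kv"])
            result.append(f"{ext['hostname']}:{port}")
        else:
            result.append(f"{node['hostname']}:{node['ports']['kv']}")
    return result


nodes = [{
    "hostname": "cb-example-0000.cb-example.default.svc",
    "ports": {"kv": 11210},
    "alternateAddresses": {"external": {
        "hostname": "cb-example-0000.cb-example.example.com",
        "ports": {"kv": 11210},
    }},
}]

net = select_network("cb-example-0000.cb-example.example.com", nodes)
print(net, addresses(nodes, net))
```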

Supported Client Versions for use with Exposed Features

Because Public Networking with External DNS and Generic Networking require exposed features, clients must support this feature. The minimum versions are listed below.

| Client | Minimum Version |
|---|---|
| Couchbase Server (XDCR) | 6.0.1+, 5.5.3+ |
| Node.js SDK | 2.5.0+ |
| PHP SDK | Any supported version using … |
| Python SDK | Any supported version using … |
| C SDK (a.k.a. …) | 2.9.2+ |
| Java SDK | 2.7.7+ |
| .NET SDK | 2.7.9+ |
| Go SDK | 1.6.1+ |
| Couchbase Sync Gateway | 2.7.0+* |
| Couchbase Elasticsearch Connector | 4.1.0+ |
| Couchbase Kafka Connector | 3.4.5+ |

*Earlier versions of Sync Gateway have limited support for exposed features. For more information, refer to Sync Gateway Limitations When Using Exposed Features.
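A deployment can be checked against these minimums with a numeric tuple comparison. This sketch transcribes only the rows that give a single plain version (it omits Couchbase Server XDCR, PHP, and Python); the dictionary keys are hypothetical labels, and pre-release suffixes such as `-beta` are not handled.

```python
# Minimum versions from the table above (single-version rows only).
MIN_VERSIONS = {
    "nodejs": "2.5.0",
    "c": "2.9.2",
    "java": "2.7.7",
    "dotnet": "2.7.9",
    "go": "1.6.1",
    "sync-gateway": "2.7.0",
    "elasticsearch-connector": "4.1.0",
    "kafka-connector": "3.4.5",
}


def parse_version(version: str) -> tuple[int, ...]:
    """Turn "2.7.10" into (2, 7, 10) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def supports_exposed_features(client: str, version: str) -> bool:
    """True if the client version meets the table's minimum."""
    return parse_version(version) >= parse_version(MIN_VERSIONS[client])


print(supports_exposed_features("java", "2.7.10"))
```

Comparing tuples rather than strings avoids the classic mistake of treating "2.7.10" as older than "2.7.7".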

Note: A known issue (K8S-1585) can cause DNS SRV lookups to fail when connecting over TLS to a Couchbase cluster in the same Kubernetes cluster. As a workaround, add wildcard entries to the certificate's Subject Alternative Names (SANs), as discussed in the Creating TLS Certificates tutorial.
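To see why wildcard SANs help, the sketch below applies the usual single-label wildcard rule from RFC 6125: a `*` stands for exactly one leftmost DNS label. The SAN and host names are hypothetical; the Creating TLS Certificates tutorial remains the authority on which SANs the Operator needs.

```python
def san_matches(san: str, hostname: str) -> bool:
    """Check one DNS SAN against a host name; '*' covers one leftmost label."""
    san_labels = san.lower().rstrip(".").split(".")
    host_labels = hostname.lower().rstrip(".").split(".")
    if len(san_labels) != len(host_labels):
        return False  # a wildcard never spans more than one label
    if san_labels[0] != "*" and san_labels[0] != host_labels[0]:
        return False
    return san_labels[1:] == host_labels[1:]


def certificate_covers(sans: list[str], hostname: str) -> bool:
    """True if any SAN in the certificate matches the host name."""
    return any(san_matches(san, hostname) for san in sans)


sans = ["*.cb-example.default.svc"]  # hypothetical SAN list
print(certificate_covers(sans, "cb-example-0000.cb-example.default.svc"))
print(certificate_covers(sans, "cb-example-0000.cb-example.default.svc.cluster.local"))
```

The second lookup fails because the fully qualified name has an extra label, which is why a certificate typically needs a wildcard for each form of the name that clients may see.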

Sync Gateway Limitations When Using Exposed Features

Earlier versions of Sync Gateway can experience certain network limitations when connecting to a Couchbase cluster that is configured with exposed features. Table 2 describes the different network limitations that can occur based on the version of Sync Gateway that is being used.

Sync Gateway, like other Couchbase clients, does not require exposed features to be configured in order to establish a network connection with an instance of Couchbase Server that is running on the same local Kubernetes cluster.

The rows labeled Local in Table 2 assume that you already have exposed features configured for a different purpose, for example to expose the admin port for remote administration or to connect to a remote cluster for XDCR.

However, if you are running Sync Gateway on the same Kubernetes cluster as Couchbase Server, and there is nothing else requiring you to configure couchbaseclusters.spec.networking.exposedFeatures for a different purpose, then you can ignore the rest of this section as this issue will not affect you.

Table 2. Sync Gateway network limitations by version

| Sync Gateway Version | Relationship to Cluster | Method | Connection String | High Availability |
|---|---|---|---|---|
| 2.8.2+ | Local | DNS SRV | | ✅ Yes |
| 2.8.2+ | Remote | DNS SRV | | ✅ Yes |
| 2.7.3, 2.8.0 | Local | DNS address | | 🚫 No |
| 2.7.3, 2.8.0 | Remote | DNS SRV | | ✅ Yes |
| ≤ 2.7.2 | Local | DNS address | | 🚫 No |
| ≤ 2.7.2 | Remote | Round-robin DNS | | ⚠️ Yes |

In Table 2 above, the Relationship to Cluster column indicates the location of the Sync Gateway cluster in relation to the Couchbase cluster that is being managed by the Autonomous Operator. Local refers to instances where Sync Gateway is deployed in the same Kubernetes cluster where Couchbase Server is running (see Intra-Kubernetes Networking and Inter-Kubernetes Networking with Forwarded DNS). Remote refers to instances where Sync Gateway is deployed outside of the Kubernetes cluster where Couchbase Server is running (see Public Networking with External DNS).
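Table 2 can be encoded as a lookup when checking a deployment. This sketch transcribes the table and the version bands given in the text below it; the function name and return strings are hypothetical. It assumes that any release between 2.7.3 and 2.8.0 behaves like those two versions, and it rejects versions the table does not cover (such as 2.8.1).

```python
def sync_gateway_method(version: str, relationship: str) -> tuple[str, str]:
    """Return (connection method, high availability) per Table 2.

    relationship is "local" or "remote".
    """
    if relationship not in ("local", "remote"):
        raise ValueError("relationship must be 'local' or 'remote'")
    v = tuple(int(part) for part in version.split("."))
    if v >= (2, 8, 2):
        return ("DNS SRV", "yes")
    if (2, 7, 3) <= v <= (2, 8, 0):  # assumption: whole band behaves like 2.7.3/2.8.0
        if relationship == "local":
            return ("DNS address", "no")
        return ("DNS SRV", "yes")
    if v <= (2, 7, 2):
        if relationship == "local":
            return ("DNS address", "no")
        return ("round-robin DNS", "yes, with caveats")
    raise ValueError(f"version {version} is not covered by Table 2")


print(sync_gateway_method("2.8.0", "local"))
```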

- Sync Gateway 2.8.2 and higher do not experience any connection issues related to exposed features, as these versions fully support DNS SRV lookup and explicit network selection. Both Local and Remote connections can be configured in accordance with the standard client connection documentation.
- Sync Gateway 2.7.3 and 2.8.0 introduced DNS SRV lookup for service discovery. However, their automatic network selection incorrectly directs traffic to "external" interfaces when Sync Gateway runs within the same Kubernetes cluster as the target Couchbase cluster.
  - Remote connections still work as expected and can be configured in accordance with the standard client connection documentation.
  - Local connections do not work as expected: Sync Gateway ends up selecting the "external" network interface. This sends network traffic through a load balancer, which can incur significant costs with a cloud provider.

    If you intend to use a Local connection, you can mitigate the issue by connecting using only DNS addresses. This method requires the connection string to list the hostnames of all the Couchbase Server pods in the Couchbase cluster. It is strongly discouraged because it does not tolerate changes to the Couchbase cluster's pod topology. (Sync Gateway is informed of topology changes as long as it keeps running. Once it restarts, however, it fails to reconnect because the connection string still describes the old topology.)
- Sync Gateway 2.7.2 and lower do not support DNS SRV at all and instead fall back to a DNS address record lookup. Local connections have the same limitations as in versions 2.7.3 and 2.8.0. Remote connections support high availability through round-robin DNS. (Note, however, that because this connection method runs over HTTP on port 8091, there is a potential risk of denial of service if port 8091 receives too many connections at once.)
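For the Local mitigation described above, the connection string enumerates every pod. A minimal sketch of building one, assuming the comma-separated multi-host form of Couchbase connection strings and hypothetical pod, service, and namespace names:

```python
def explicit_hosts_connection_string(pods: list[str], service: str,
                                     namespace: str, tls: bool = False) -> str:
    """Build couchbase://host1,host2,... listing every pod's DNS name."""
    if not pods:
        raise ValueError("at least one pod is required")
    scheme = "couchbases" if tls else "couchbase"
    hosts = ",".join(f"{pod}.{service}.{namespace}.svc" for pod in pods)
    return f"{scheme}://{hosts}"


pods = ["cb-example-0000", "cb-example-0001", "cb-example-0002"]
print(explicit_hosts_connection_string(pods, "cb-example", "default"))
# Pods added or removed later are not reflected in this string; after a restart,
# Sync Gateway can only bootstrap from hosts that are still listed and still exist.
```

This fragility is exactly why the method is discouraged: the string is a snapshot of the topology at the time it was written.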